Visualizing Population Based Training (PBT) Hyperparameter Optimization

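Assumptions: the reader has a basic understanding of the PBT algorithm and wants to dig into and verify the underlying algorithm behavior, especially when using Ray's PBT implementation. This guide provides resources for the relevant background.

Population Based Training (PBT) is a powerful technique that combines parallel search with sequential optimization to find optimal hyperparameters efficiently. Unlike traditional hyperparameter tuning methods, PBT adjusts hyperparameters dynamically during training: multiple training runs ("trials") evolve together, and poorly performing configurations are periodically replaced with perturbed copies of better-performing ones.

This tutorial walks through a simple example to help you understand what happens under the hood when you tune with PBT.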

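We will learn how to:

Set up checkpoint saving and loading for PBT with the function trainable interface

Configure Tune and the PBT scheduler parameters

Visualize PBT algorithm behavior to build some intuition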

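Set up the toy example problem

The toy optimization problem we use comes from the PBT paper (see Figure 2 there for more details). The goal is to find the parameters that maximize a quadratic function while only having access to an estimator that depends on a set of hyperparameters. A practical analogy is maximizing a model's (unknown) generalization ability over all possible inputs when only the model's empirical loss is available, and optimizing that loss depends on hyperparameters.

We start with some imports.

!pip install -q -U "ray[tune]" matplotlib

Note: this tutorial imports functions from a helper file named pbt_visualization_utils.py. These functions define the plotting utilities used to visualize PBT training progress.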

import numpy as np
import matplotlib.pyplot as plt
import os
import pickle
import tempfile

import ray
from ray import tune
from ray.tune.schedulers import PopulationBasedTraining
from ray.tune.tune_config import TuneConfig
from ray.tune.tuner import Tuner

from pbt_visualization_utils import (
    get_init_theta,
    plot_parameter_history,
    plot_Q_history,
    make_animation,
)


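Concretely, we use the definitions provided in the paper (with very minor modifications) for the function we are trying to optimize and for the given estimator.

Our goal is to maximize a quadratic function Q, but we only have access to an estimator Qhat that depends on the hyperparameters. This mirrors the practical situation in which we want to optimize true generalization performance but can only measure training performance, which is influenced by the hyperparameters.

The concepts used in this example, and their counterparts in practice, are listed below.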

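| Symbol | In this example | Real-world analogy |
|---|---|---|
| `theta = [theta0, theta1]` | Model parameters, updated at every training step. | Neural network parameters |
| `h = [h0, h1]` | Hyperparameters optimized by PBT. | Learning rate, batch size, etc. |
| `Q(theta)` | The true reward function we want to maximize but cannot access directly. | True generalization ability: it exists in theory but cannot be observed in practice. |
| `Qhat(theta, h)` | The estimated reward function we actually optimize; it depends on both the hyperparameters and the model parameters. | Empirical reward during training. |
| `grad_Qhat(theta, h)` | Gradient of the estimated reward function, used to update the model parameters. | Gradient descent step during training |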

Here is the code implementation.

def Q(theta):
    # equation for an elliptic paraboloid with a center at (0, 0, 1.2)
    return 1.2 - (3 / 4 * theta[0] ** 2 + theta[1] ** 2)


def Qhat(theta, h):
    return 1.2 - (h[0] * theta[0] ** 2 + h[1] * theta[1] ** 2)


def grad_Qhat(theta, h):
    theta_grad = -2 * h * theta
    # Scale the first component by 3/4, the theta[0] coefficient used in Q
    theta_grad[0] *= 3 / 4
    h_grad = -np.square(theta)
    h_grad[0] *= 3 / 4
    return {"theta": theta_grad, "h": h_grad}


theta_0 = get_init_theta()
print(f"Initial parameter values: theta = {theta_0}")

Initial parameter values: theta = [0.9 0.9]

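Define the function trainable

We define a training function that will:

Load the hyperparameter configuration

Initialize the model, restoring from a checkpoint if one exists (this matters for PBT, because the scheduler frequently pauses and resumes trials when they are exploited)

Run the training loop and save checkpoints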

def train_func(config):
    # Load the hyperparam config passed in by the Tuner
    h0 = config.get("h0")
    h1 = config.get("h1")
    h = np.array([h0, h1]).astype(float)

    lr = config.get("lr")
    train_step = 1
    checkpoint_interval = config.get("checkpoint_interval", 1)

    # Initialize the model parameters
    theta = get_init_theta()

    # Load a checkpoint if it exists
    # This checkpoint could be a trial's own checkpoint to resume,
    # or another trial's checkpoint placed by PBT that we will exploit
    checkpoint = tune.get_checkpoint()
    if checkpoint:
        with checkpoint.as_directory() as checkpoint_dir:
            with open(os.path.join(checkpoint_dir, "checkpoint.pkl"), "rb") as f:
                checkpoint_dict = pickle.load(f)
        # Load in model (theta)
        theta = checkpoint_dict["theta"]
        last_step = checkpoint_dict["train_step"]
        train_step = last_step + 1

    # Main training loop (trial stopping is configured later)
    while True:
        # Perform gradient ascent steps
        param_grads = grad_Qhat(theta, h)
        theta_grad = np.asarray(param_grads["theta"])
        theta = theta + lr * theta_grad

        # Define which custom metrics we want in our trial result
        result = {
            "Q": Q(theta),
            "theta0": theta[0],
            "theta1": theta[1],
            "h0": h0,
            "h1": h1,
            "train_step": train_step,
        }

        # Checkpoint every `checkpoint_interval` steps
        should_checkpoint = train_step % checkpoint_interval == 0
        with tempfile.TemporaryDirectory() as temp_checkpoint_dir:
            checkpoint = None
            if should_checkpoint:
                checkpoint_dict = {
                    "h": h,
                    "train_step": train_step,
                    "theta": theta,
                }
                with open(
                    os.path.join(temp_checkpoint_dir, "checkpoint.pkl"), "wb"
                ) as f:
                    pickle.dump(checkpoint_dict, f)
                checkpoint = tune.Checkpoint.from_directory(temp_checkpoint_dir)

            # Report metric for this training iteration, and include the
            # trial checkpoint that contains the current parameters if we
            # saved it this train step
            tune.report(result, checkpoint=checkpoint)

        train_step += 1

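Note

Because PBT keeps restoring trials from the latest checkpoint, it is important to save and load train_step correctly in the function trainable. Make sure to increment the loaded train_step by one, as done above when restoring from checkpoint_dict. This avoids repeating an iteration and keeps the checkpoint and perturbation intervals in sync.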
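The step bookkeeping described in this note can be checked without Ray. The following is a minimal sketch, assuming a hypothetical `run_segment` helper that stands in for one launch of the training function and a plain pickle file in place of a Tune checkpoint; it does not model how Tune stores or hands out checkpoints.

```python
import os
import pickle
import tempfile


def run_segment(checkpoint_path, num_steps, checkpoint_interval=4):
    """Hypothetical stand-in for one launch of a function trainable.

    Resumes from `checkpoint_path` if it exists, runs `num_steps` steps,
    and returns the list of step numbers it executed.
    """
    train_step = 1
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path, "rb") as f:
            state = pickle.load(f)
        # The saved step has already been run, so resume at the next one.
        train_step = state["train_step"] + 1

    executed = []
    for _ in range(num_steps):
        executed.append(train_step)
        if train_step % checkpoint_interval == 0:
            with open(checkpoint_path, "wb") as f:
                pickle.dump({"train_step": train_step}, f)
        train_step += 1
    return executed


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "checkpoint.pkl")
    first = run_segment(path, num_steps=4)   # fresh start, checkpoints at step 4
    second = run_segment(path, num_steps=4)  # resumes from the step-4 checkpoint
    print(first, second)
```

Without the `+ 1` on restore, the second segment would start again at step 4, so step 4 would be run twice and every later checkpoint would land one iteration off the perturbation schedule.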

Configure PBT and the Tuner

We start by initializing Ray (shutting down any existing session first).

if ray.is_initialized():
    ray.shutdown()
ray.init()

2025-02-24 16:21:27,556 INFO worker.py:1841 -- Started a local Ray instance.

| Python version | 3.11.11 |
| Ray version | 2.42.1 |

Create the PBT scheduler

perturbation_interval = 4

pbt_scheduler = PopulationBasedTraining(
    time_attr="training_iteration",
    perturbation_interval=perturbation_interval,
    metric="Q",
    mode="max",
    quantile_fraction=0.5,
    resample_probability=0.5,
    hyperparam_mutations={
        "lr": tune.qloguniform(5e-3, 1e-1, 5e-4),
        "h0": tune.uniform(0.0, 1.0),
        "h1": tune.uniform(0.0, 1.0),
    },
    synch=True,
)

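Some notes on the PBT config:

time_attr="training_iteration" together with perturbation_interval=4 means that every 4 training iterations the scheduler decides whether a trial should continue or exploit a different trial.

metric="Q" and mode="max" specify how trial performance is ranked. In this case, the high-performing trials are the top 50% of trials (set by quantile_fraction=0.5) that report the highest Q metric. Note that the metric and mode could also be set in TuneConfig.

hyperparam_mutations specifies that the learning rate lr and the additional hyperparameters h0 and h1 should be perturbed by PBT, and defines the resampling distribution for each hyperparameter (resample_probability=0.5 means that resampling and mutation each happen with 50% probability).

synch=True means that PBT runs synchronously. This introduces waiting and slows the algorithm down, but for the purposes of this tutorial it produces visualizations that are easier to follow.

In synchronous PBT, we wait until all trials reach the next perturbation_interval before deciding which trials should continue and which should pause and start from another trial's checkpoint. With 2 trials, this means that at every perturbation_interval the worse-performing trial exploits the better-performing one.

This is not always the case in asynchronous PBT, where trials report results one at a time and each decides whether to continue or exploit as it reports. A trial may judge itself the best performer and decide to continue because the other trial has not yet reported its better result, so we would not see a trial exploit at every perturbation_interval.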
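To make the explore step in these notes concrete, here is a simplified sketch of a "resample or scale" perturbation. It is an illustration under stated assumptions, not Ray's implementation: the `explore` function, the `mutations` dictionary of sampling callables, and the scaling factors 1.2 and 0.8 (taken from the `* 1.2` and `* 0.8` entries in the run log of this tutorial) are all hypothetical stand-ins.

```python
import random


def explore(config, mutations, resample_probability=0.5, factors=(1.2, 0.8), rng=random):
    """Simplified explore step: resample or scale each mutated hyperparameter."""
    new_config = dict(config)
    for key, sample_fn in mutations.items():
        if rng.random() < resample_probability:
            # Draw a fresh value from the resampling distribution.
            new_config[key] = sample_fn(rng)
        else:
            # Otherwise scale the current value up or down.
            new_config[key] = config[key] * rng.choice(factors)
    return new_config


rng = random.Random(0)
mutations = {
    "h0": lambda r: r.uniform(0.0, 1.0),
    "h1": lambda r: r.uniform(0.0, 1.0),
}
parent = {"lr": 0.05, "h0": 0.5, "h1": 0.5}
child = explore(parent, mutations, rng=rng)
print(child)
```

Keys that are not listed in `mutations` (here `lr`) are copied unchanged. A scaled value can also leave the resampling range, which is consistent with the h0 values above 1.0 that appear after a `* 1.2` perturbation in the run log.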

Create the Tuner

tuner = Tuner(
    train_func,
    param_space={
        "lr": 0.05,
        "h0": tune.grid_search([0.0, 1.0]),
        "h1": tune.sample_from(lambda spec: 1.0 - spec.config["h0"]),
        "num_training_iterations": 100,
        # Match `checkpoint_interval` with `perturbation_interval`
        "checkpoint_interval": perturbation_interval,
    },
    tune_config=TuneConfig(
        num_samples=1,
        # Set the PBT scheduler in this config
        scheduler=pbt_scheduler,
    ),
    run_config=tune.RunConfig(
        stop={"training_iteration": 100},
        failure_config=tune.FailureConfig(max_failures=3),
    ),
)

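Note

We recommend matching checkpoint_interval with the perturbation_interval from the PBT config. This ensures that the PBT algorithm actually exploits trials at their most recent iteration.

If your perturbation_interval is large and you want to checkpoint more often, set perturbation_interval to a multiple of checkpoint_interval.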
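The reason for the "multiple of" rule can be seen with a small check. This sketch assumes checkpoints are written whenever the step number is divisible by `checkpoint_interval`, as in the training function of this tutorial; the helper name `checkpoint_at_every_perturbation` is hypothetical, and how Tune picks a checkpoint to clone is not modeled.

```python
def checkpoint_at_every_perturbation(
    perturbation_interval, checkpoint_interval, num_iterations=100
):
    """Return True if a checkpoint is saved at every perturbation step."""
    steps = range(1, num_iterations + 1)
    checkpoint_steps = {s for s in steps if s % checkpoint_interval == 0}
    perturbation_steps = [s for s in steps if s % perturbation_interval == 0]
    return all(s in checkpoint_steps for s in perturbation_steps)


for p_int, c_int in [(4, 4), (8, 4), (6, 4), (4, 8)]:
    ok = checkpoint_at_every_perturbation(p_int, c_int)
    print(f"perturbation_interval={p_int}, checkpoint_interval={c_int}: {ok}")
```

Only the first two pairs, where perturbation_interval is a multiple of checkpoint_interval, have a fresh checkpoint at every perturbation step; in the other two, some exploits would have to fall back to an older checkpoint.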

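Some other notes on the Tuner config:

param_space specifies the initial config input to our training function. A grid_search over two values launches two trials with specific hyperparameter sets, which PBT then continues to modify during training.

The initial settings of h0 and h1 are configured so that two trials are launched, one with h = [1, 0] and the other with h = [0, 1]. This matches the experiment in the paper and is used for comparison against a grid_search baseline with the PBT scheduler removed.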
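As a rough illustration of what this param_space resolves to, the sketch below expands the two grid values by hand. It is plain Python rather than Tune's variant generator, and `expand_initial_configs` is a hypothetical helper used only for this example.

```python
def expand_initial_configs(h0_values, lr=0.05):
    """Build one initial config per grid value, with h1 derived from h0."""
    configs = []
    for h0 in h0_values:
        # Mirrors the dependent sample in param_space: h1 = 1.0 - h0
        configs.append({"lr": lr, "h0": h0, "h1": 1.0 - h0})
    return configs


initial_configs = expand_initial_configs([0.0, 1.0])
for cfg in initial_configs:
    print(cfg)
```

Each grid value produces exactly one trial because num_samples=1, so the population consists of the two configurations h = [0, 1] and h = [1, 0].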

Run the experiment

We launch the trials by calling Tuner.fit.

pbt_results = tuner.fit()

Tune Status

| Current time | 2025-02-24 16:22:07 |
| Running for | 00:00:39.86 |
| Memory | 21.5/36.0 GiB |

System Info

PopulationBasedTraining: 24 checkpoints, 24 perturbs
Logical resource usage: 1.0/12 CPUs, 0/0 GPUs

Trial Status

| Trial name | Status | Location | h0 | Iterations | Total time (s) | Q | theta0 | theta1 |
|---|---|---|---|---|---|---|---|---|
| train_func_74757_00000 | TERMINATED | 127.0.0.1:23555 | 0.89156 | 100 | 0.0432718 | 1.19993 | 0.00573655 | 0.00685687 |
| train_func_74757_00001 | TERMINATED | 127.0.0.1:23556 | 1.11445 | 100 | 0.0430496 | 1.19995 | 0.0038124 | 0.00615009 |

2025-02-24 16:21:28,081 WARNING sample.py:469 -- sample_from functions that take a spec dict are deprecated. Please update your function to work with the config dict directly.

2025-02-24 16:21:28,082 WARNING sample.py:469 -- sample_from functions that take a spec dict are deprecated. Please update your function to work with the config dict directly.

2025-02-24 16:21:29,018 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 0.243822) into trial 74757_00001 (score = 0.064403)

2025-02-24 16:21:29,018 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.05 --- (resample) --> 0.017

h0 : 0.0 --- (* 1.2) --> 0.0

h1 : 1.0 --- (resample) --> 0.2659170728716209

2025-02-24 16:21:29,795 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:30,572 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:30,579 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 0.442405) into trial 74757_00001 (score = 0.268257)

2025-02-24 16:21:30,579 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.05 --- (resample) --> 0.0345

h0 : 0.0 --- (resample) --> 0.9170235381005166

h1 : 1.0 --- (resample) --> 0.6256279739131234

2025-02-24 16:21:31,351 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:32,127 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:32,134 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 0.682806) into trial 74757_00000 (score = 0.527889)

2025-02-24 16:21:32,134 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (resample) --> 0.0305

h0 : 0.9170235381005166 --- (* 1.2) --> 1.1004282457206198

h1 : 0.6256279739131234 --- (resample) --> 0.027475735413096558

2025-02-24 16:21:32,921 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:33,706 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:33,713 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 0.846848) into trial 74757_00000 (score = 0.823588)

2025-02-24 16:21:33,713 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (* 0.8) --> 0.027600000000000003

h0 : 0.9170235381005166 --- (* 1.2) --> 1.1004282457206198

h1 : 0.6256279739131234 --- (resample) --> 0.7558831532799641

2025-02-24 16:21:34,498 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:35,346 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:35,353 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 0.958808) into trial 74757_00000 (score = 0.955140)

2025-02-24 16:21:35,353 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (* 0.8) --> 0.027600000000000003

h0 : 0.9170235381005166 --- (* 1.2) --> 1.1004282457206198

h1 : 0.6256279739131234 --- (* 1.2) --> 0.750753568695748

2025-02-24 16:21:36,193 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:36,979 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:36,986 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 1.035238) into trial 74757_00000 (score = 1.032648)

2025-02-24 16:21:36,986 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (* 1.2) --> 0.0414

h0 : 0.9170235381005166 --- (resample) --> 0.42270740484472435

h1 : 0.6256279739131234 --- (* 0.8) --> 0.5005023791304988

2025-02-24 16:21:37,808 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/result.json

2025-02-24 16:21:38,675 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 1.087423) into trial 74757_00000 (score = 1.070314)

2025-02-24 16:21:38,675 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (resample) --> 0.013000000000000001

h0 : 0.9170235381005166 --- (resample) --> 0.2667247790077112

h1 : 0.6256279739131234 --- (resample) --> 0.7464010779997918

2025-02-24 16:21:40,273 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 1.123062) into trial 74757_00000 (score = 1.094701)

2025-02-24 16:21:40,274 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (resample) --> 0.035

h0 : 0.9170235381005166 --- (resample) --> 0.6700641473724329

h1 : 0.6256279739131234 --- (resample) --> 0.09369892963876703

2025-02-24 16:21:42,000 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 1.147406) into trial 74757_00000 (score = 1.138657)

2025-02-24 16:21:42,000 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (* 0.8) --> 0.027600000000000003

h0 : 0.9170235381005166 --- (* 1.2) --> 1.1004282457206198

h1 : 0.6256279739131234 --- (resample) --> 0.4113637620174102

2025-02-24 16:21:43,617 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 1.164039) into trial 74757_00000 (score = 1.161962)

2025-02-24 16:21:43,618 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (* 0.8) --> 0.027600000000000003

h0 : 0.9170235381005166 --- (resample) --> 0.22455715637303986

h1 : 0.6256279739131234 --- (* 1.2) --> 0.750753568695748

2025-02-24 16:21:45,229 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 1.175406) into trial 74757_00000 (score = 1.168546)

2025-02-24 16:21:45,229 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (resample) --> 0.0075

h0 : 0.9170235381005166 --- (* 0.8) --> 0.7336188304804133

h1 : 0.6256279739131234 --- (* 1.2) --> 0.750753568695748

2025-02-24 16:21:46,822 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 1.183176) into trial 74757_00000 (score = 1.177124)

2025-02-24 16:21:46,823 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (resample) --> 0.016

h0 : 0.9170235381005166 --- (resample) --> 0.9850746699152328

h1 : 0.6256279739131234 --- (resample) --> 0.6345079222898454

2025-02-24 16:21:48,411 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 1.188488) into trial 74757_00000 (score = 1.186006)

2025-02-24 16:21:48,411 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0345 --- (resample) --> 0.0545

h0 : 0.9170235381005166 --- (resample) --> 0.644936448785508

h1 : 0.6256279739131234 --- (resample) --> 0.47452815582611396

2025-02-24 16:21:49,978 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 1.192519) into trial 74757_00001 (score = 1.192121)

2025-02-24 16:21:49,978 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.0545 --- (resample) --> 0.006500000000000001

h0 : 0.644936448785508 --- (* 0.8) --> 0.5159491590284064

h1 : 0.47452815582611396 --- (resample) --> 0.20892073190112748

2025-02-24 16:21:51,547 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 1.195139) into trial 74757_00001 (score = 1.192779)

2025-02-24 16:21:51,548 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.0545 --- (resample) --> 0.0405

h0 : 0.644936448785508 --- (* 0.8) --> 0.5159491590284064

h1 : 0.47452815582611396 --- (* 0.8) --> 0.3796225246608912

2025-02-24 16:21:53,193 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 1.196841) into trial 74757_00001 (score = 1.196227)

2025-02-24 16:21:53,194 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.0545 --- (resample) --> 0.043000000000000003

h0 : 0.644936448785508 --- (resample) --> 0.8612751379606769

h1 : 0.47452815582611396 --- (resample) --> 0.008234170890763504

2025-02-24 16:21:54,799 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 1.197947) into trial 74757_00001 (score = 1.197688)

2025-02-24 16:21:54,799 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.0545 --- (* 1.2) --> 0.0654

h0 : 0.644936448785508 --- (resample) --> 0.2636264337170955

h1 : 0.47452815582611396 --- (* 0.8) --> 0.3796225246608912

2025-02-24 16:21:56,428 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 1.198666) into trial 74757_00001 (score = 1.198417)

2025-02-24 16:21:56,429 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.0545 --- (resample) --> 0.0445

h0 : 0.644936448785508 --- (* 0.8) --> 0.5159491590284064

h1 : 0.47452815582611396 --- (resample) --> 0.4078642041684053

2025-02-24 16:21:58,033 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 1.199133) into trial 74757_00001 (score = 1.198996)

2025-02-24 16:21:58,033 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.0545 --- (resample) --> 0.0085

h0 : 0.644936448785508 --- (resample) --> 0.21841880940819025

h1 : 0.47452815582611396 --- (* 0.8) --> 0.3796225246608912

2025-02-24 16:21:59,690 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 1.199437) into trial 74757_00001 (score = 1.199159)

2025-02-24 16:21:59,690 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.0545 --- (* 1.2) --> 0.0654

h0 : 0.644936448785508 --- (* 1.2) --> 0.7739237385426097

h1 : 0.47452815582611396 --- (resample) --> 0.15770319740458727

2025-02-24 16:22:01,361 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 1.199651) into trial 74757_00000 (score = 1.199634)

2025-02-24 16:22:01,362 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.0654 --- (* 0.8) --> 0.052320000000000005

h0 : 0.7739237385426097 --- (* 1.2) --> 0.9287084862511316

h1 : 0.15770319740458727 --- (resample) --> 0.4279796053289977

2025-02-24 16:22:03,081 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 1.199790) into trial 74757_00001 (score = 1.199772)

2025-02-24 16:22:03,082 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.052320000000000005 --- (* 0.8) --> 0.041856000000000004

h0 : 0.9287084862511316 --- (resample) --> 0.579167003721271

h1 : 0.4279796053289977 --- (* 1.2) --> 0.5135755263947972

2025-02-24 16:22:04,698 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00000 (score = 1.199872) into trial 74757_00001 (score = 1.199847)

2025-02-24 16:22:04,699 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00001:

lr : 0.052320000000000005 --- (* 1.2) --> 0.062784

h0 : 0.9287084862511316 --- (* 1.2) --> 1.1144501835013578

h1 : 0.4279796053289977 --- (resample) --> 0.25894972559062557

2025-02-24 16:22:06,309 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 74757_00001 (score = 1.199924) into trial 74757_00000 (score = 1.199920)

2025-02-24 16:22:06,310 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial74757_00000:

lr : 0.062784 --- (resample) --> 0.006500000000000001

h0 : 1.1144501835013578 --- (* 0.8) --> 0.8915601468010863

h1 : 0.25894972559062557 --- (resample) --> 0.4494584110928429

2025-02-24 16:22:07,944 INFO tune.py:1009 -- Wrote the latest version of all result files and experiment state to '/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28' in 0.0049s.

2025-02-24 16:22:07,946 INFO tune.py:1041 -- Total run time: 39.88 seconds (39.86 seconds for the tuning loop).

(train_func pid=23370) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/checkpoint_000000)

(train_func pid=23377) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/checkpoint_000000)

(train_func pid=23397) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/checkpoint_000004) [repeated 8x across cluster] (Ray deduplicates logs by default. Set RAY_DEDUP_LOGS=0 to disable log deduplication, or see https://docs.rayai.org.cn/en/master/ray-observability/user-guides/configure-logging.html#log-deduplication for more options.)

(train_func pid=23398) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/checkpoint_000001) [repeated 7x across cluster]

(train_func pid=23428) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/checkpoint_000005) [repeated 7x across cluster]

(train_func pid=23428) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/checkpoint_000004) [repeated 6x across cluster]

(train_func pid=23453) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/checkpoint_000011) [repeated 7x across cluster]

(train_func pid=23453) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/checkpoint_000008) [repeated 7x across cluster]

(train_func pid=23478) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/checkpoint_000014) [repeated 6x across cluster]

(train_func pid=23479) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/checkpoint_000013) [repeated 7x across cluster]

(train_func pid=23509) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/checkpoint_000018) [repeated 8x across cluster]

(train_func pid=23509) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/checkpoint_000017) [repeated 7x across cluster]

(train_func pid=23530) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00000_0_h0=0.0000_2025-02-24_16-21-28/checkpoint_000021) [repeated 6x across cluster]

(train_func pid=23530) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/checkpoint_000011) [repeated 6x across cluster]

(train_func pid=23556) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/checkpoint_000012)

(train_func pid=23556) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-21-28/train_func_74757_00001_1_h0=1.0000_2025-02-24_16-21-28/checkpoint_000013)

Visualize the results

Using the helper functions from pbt_visualization_utils.py, we can create some plots that help us understand how PBT training progresses.

fig, axs = plt.subplots(1, 2, figsize=(13, 6), gridspec_kw=dict(width_ratios=[1.5, 1]))

colors = ["red", "black"]
labels = ["h = [1, 0]", "h = [0, 1]"]

plot_parameter_history(
    pbt_results,
    colors,
    labels,
    perturbation_interval=perturbation_interval,
    fig=fig,
    ax=axs[0],
)
plot_Q_history(pbt_results, colors, labels, ax=axs[1])

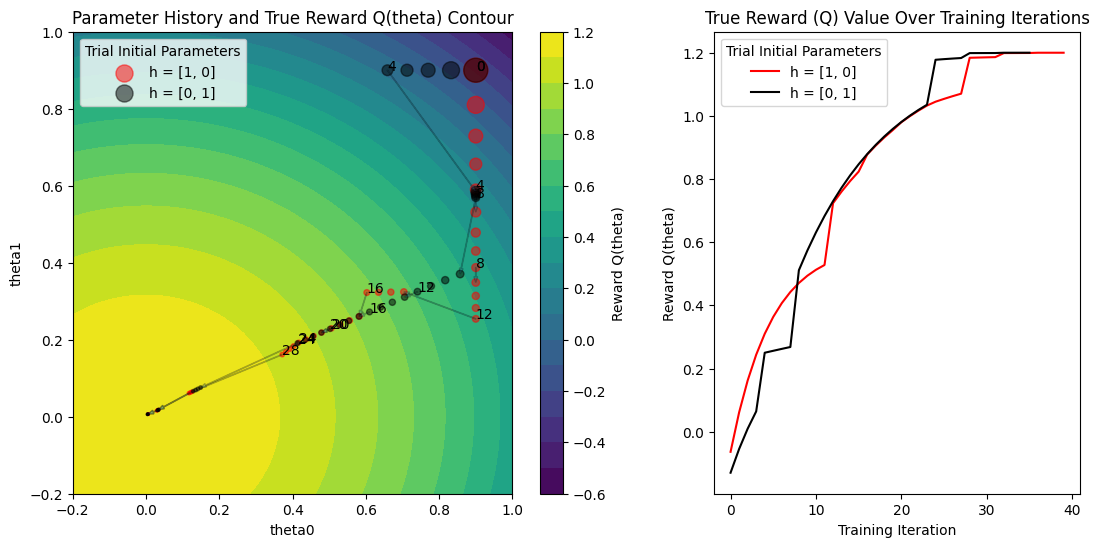

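The plot on the right shows the true function value Q(theta) of both trials as training progresses. Both trials end very close to the maximum of 1.2 (about 1.1999 at iteration 100). In this example, PBT finds the optimum from either initial hyperparameter configuration.

Here is how to read the plot on the left:

The plot on the left shows the parameter values (theta0, theta1) of both trials at every training iteration. The dots get smaller as the training iteration increases.

Every perturbation_interval training iterations, the iteration number is shown as a label next to the dot. Let's zoom in on the transition from iteration 4 to 5 for both trials. One of two things happens to a trial: it either continues (the red trial simply keeps training from iteration 4 to 5), or it exploits and perturbs the other trial and then performs a training step (the black trial jumps to the red trial's parameter values from iteration 4 to 5).

The gradient direction of the red trial also changes at this step, because PBT's exploit and explore step changed the hyperparameters. Recall that the gradient of the estimator Qhat depends on the hyperparameters (h0, h1).

The changing size of the jumps between training iterations shows that the learning rate is changing as well, since lr is included in the set of hyperparameters to mutate.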
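The dependence of the update on (h0, h1) and lr can be seen in a single step. The sketch below is a pure-Python restatement of the theta gradient from this tutorial's grad_Qhat (including its 3/4 factor on the first component); `grad_theta` and `ascent_step` are hypothetical helper names, and lr=0.05 is the initial learning rate from param_space.

```python
import math


def grad_theta(theta, h):
    """Theta gradient as computed by this tutorial's grad_Qhat."""
    return (-2 * h[0] * theta[0] * 3 / 4, -2 * h[1] * theta[1])


def ascent_step(theta, h, lr):
    """One gradient ascent update of theta for hyperparameters h."""
    g = grad_theta(theta, h)
    return (theta[0] + lr * g[0], theta[1] + lr * g[1])


theta = (0.9, 0.9)
steps = {
    h: ascent_step(theta, h, lr=0.05)
    for h in [(1.0, 0.0), (0.0, 1.0), (0.5, 0.5)]
}
for h, new_theta in steps.items():
    print(f"h={h}: theta moves from {theta} to {new_theta}")
```

With h = (1, 0) only theta0 moves, with h = (0, 1) only theta1 moves, and with h = (0.5, 0.5) both move. A perturbation of h therefore changes the direction of the next step, while a perturbation of lr changes its length.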

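Animate the training progress

make_animation(
    pbt_results,
    colors,
    labels,
    perturbation_interval=perturbation_interval,
    filename="pbt.gif",
)

We can also animate the training process to see how the model parameters change at every step. The animation shows:

How the parameters move through the space during training

When exploitation happens (jumps in parameter space)

How the gradient direction changes after a hyperparameter perturbation

How both trials eventually converge to the region of optimal parameters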

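Grid search comparison

The paper includes a comparison with a grid search over 2 trials that uses the same initial hyperparameter configurations (h = [1, 0] and h = [0, 1]) as the PBT experiment. The main difference in the code below is that the PBT scheduler is removed from TuneConfig. Note that lr is also sampled from tune.qloguniform here instead of being fixed at 0.05, and checkpoint_interval is left at its default of 1.

if ray.is_initialized():
    ray.shutdown()
ray.init()

tuner = Tuner(
    train_func,
    param_space={
        "lr": tune.qloguniform(1e-2, 1e-1, 5e-3),
        "h0": tune.grid_search([0.0, 1.0]),
        "h1": tune.sample_from(lambda spec: 1.0 - spec.config["h0"]),
    },
    tune_config=tune.TuneConfig(
        num_samples=1,
        metric="Q",
        mode="max",
    ),
    run_config=tune.RunConfig(
        stop={"training_iteration": 100},
        failure_config=tune.FailureConfig(max_failures=3),
    ),
)

grid_results = tuner.fit()
if grid_results.errors:
    raise RuntimeError("One or more grid search trials failed")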

Tune Status

| Current time | 2025-02-24 16:22:18 |
| Running for | 00:00:01.24 |
| Memory | 21.5/36.0 GiB |

System Info

Using FIFO scheduling algorithm.
Logical resource usage: 1.0/12 CPUs, 0/0 GPUs

Trial Status

| Trial name | Status | Location | h0 | lr | Iterations | Total time (s) | Q | theta0 | theta1 |
|---|---|---|---|---|---|---|---|---|---|
| train_func_91d06_00000 | TERMINATED | 127.0.0.1:23610 | 0 | 0.015 | 100 | 0.068691 | 0.590668 | 0.9 | 0.0427973 |
| train_func_91d06_00001 | TERMINATED | 127.0.0.1:23609 | 1 | 0.045 | 100 | 0.0659969 | 0.389999 | 0.000830093 | 0.9 |

2025-02-24 16:22:17,325 WARNING sample.py:469 -- sample_from functions that take a spec dict are deprecated. Please update your function to work with the config dict directly.

2025-02-24 16:22:17,326 WARNING sample.py:469 -- sample_from functions that take a spec dict are deprecated. Please update your function to work with the config dict directly.

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000000)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000001)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000002)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000003)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000004)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000005)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000006)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000007)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000008)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000009)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000010)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000011)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000012)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000013)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000014)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000015)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000016)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000017)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000018)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000019)

(train_func pid=23609) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17/train_func_91d06_00001_1_h0=1.0000,lr=0.0450_2025-02-24_16-22-17/checkpoint_000020)

2025-02-24 16:22:18,562 INFO tune.py:1009 -- Wrote the latest version of all result files and experiment state to '/Users/rdecal/ray_results/train_func_2025-02-24_16-22-17' in 0.0061s.

2025-02-24 16:22:18,565 INFO tune.py:1041 -- Total run time: 1.25 seconds (1.23 seconds for the tuning loop).

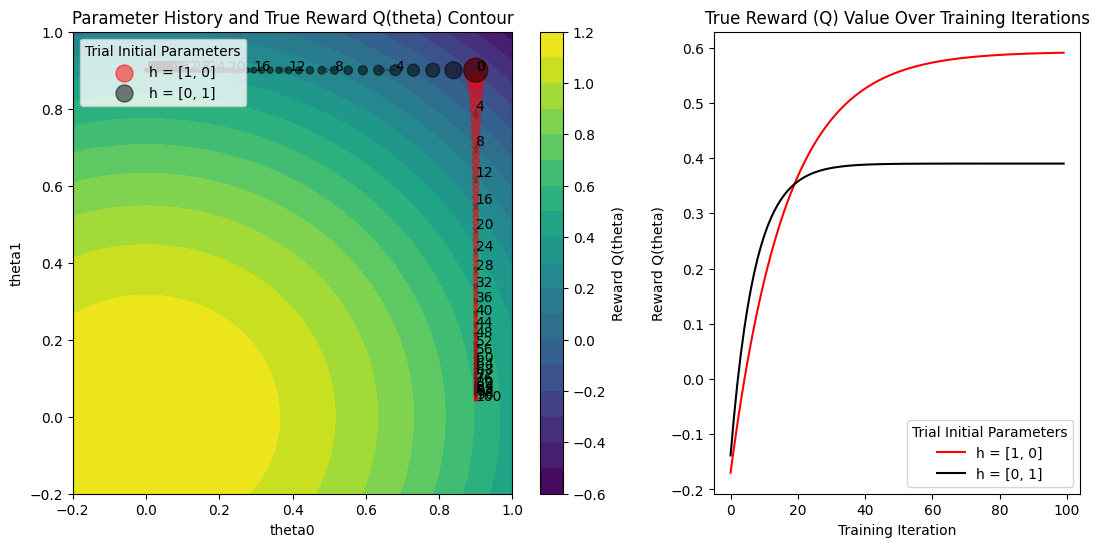

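As we can see, neither trial reaches the optimum, because each trial's hyperparameters stay fixed at their initial values. This illustrates a key advantage of PBT: traditional hyperparameter search methods such as grid search keep the searched values fixed for the whole training run, whereas PBT adjusts them dynamically and can find a better solution within the same compute budget.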

fig, axs = plt.subplots(1, 2, figsize=(13, 6), gridspec_kw=dict(width_ratios=[1.5, 1]))

colors = ["red", "black"]

labels = ["h = [1, 0]", "h = [0, 1]"]

plot_parameter_history(

    grid_results,

    colors,

    labels,

    perturbation_interval=perturbation_interval,

    fig=fig,

    ax=axs[0],

)

plot_Q_history(grid_results, colors, labels, ax=axs[1])

Compare the two plots we generated with Figure 2 of the PBT paper (in particular, we reproduced the top-left and bottom-right panels).
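The stagnation of the two fixed-hyperparameter trials can be reproduced without Ray. The following is a minimal sketch, not the tutorial's `train_func`: it assumes plain gradient ascent on `Qhat`, a starting point of `theta = (0.9, 0.9)` (an assumption standing in for `get_init_theta`), and a hypothetical helper `run_fixed`. With `h = [1, 0]` the gradient with respect to `theta[1]` is always zero, so that coordinate never moves; `h = [0, 1]` freezes `theta[0]` in the same way.

```python
import numpy as np


def Q(theta):
    # True objective from the tutorial: maximum of 1.2 at theta = (0, 0).
    return 1.2 - (3 / 4 * theta[0] ** 2 + theta[1] ** 2)


def grad_Qhat(theta, h):
    # Gradient of Qhat(theta, h) = 1.2 - (h[0]*theta[0]**2 + h[1]*theta[1]**2).
    return -2.0 * h * theta


def run_fixed(h, lr=0.01, steps=100, theta0=(0.9, 0.9)):
    # theta0 and lr are illustrative assumptions, not values taken from the tutorial.
    theta = np.array(theta0, dtype=float)
    h = np.array(h, dtype=float)
    for _ in range(steps):
        theta = theta + lr * grad_Qhat(theta, h)  # gradient ascent on Qhat
    return theta, Q(theta)


theta_a, q_a = run_fixed([1.0, 0.0])  # only theta[0] is ever updated
theta_b, q_b = run_fixed([0.0, 1.0])  # only theta[1] is ever updated
print("h = [1, 0]:", theta_a, q_a)
print("h = [0, 1]:", theta_b, q_b)
```

Under these assumptions neither run can get close to the optimum of 1.2, no matter how many steps it takes, because one coordinate of `theta` stays at its starting value.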

Increasing the PBT population size#

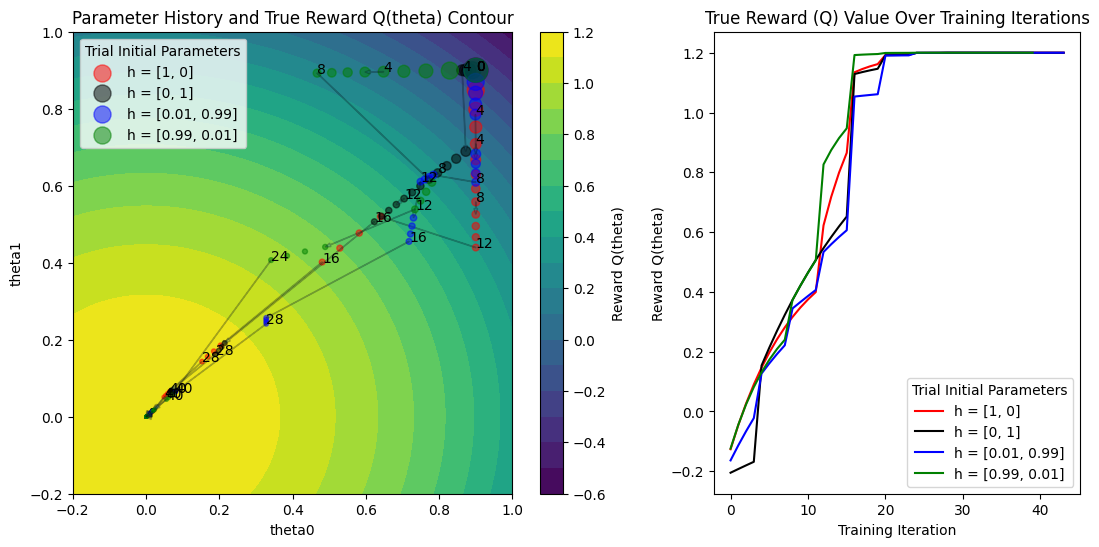

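One last experiment: what happens if we increase the PBT population size? Each poorly performing trial now exploits one trial sampled from several better-performing trials, which should produce more interesting behavior.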

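With a larger population:

The explored space is more diverse

Several "good" solutions can be discovered at the same time

Different exploitation patterns emerge, because a trial can choose among several well-performing configurations

The population as a whole can develop a more robust optimization strategy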
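The exploit step behind these points can be sketched in a few lines. This is a simplified illustration, not Ray's implementation: the helper name `exploit_targets` is hypothetical, and the only assumption is that each trial in the bottom quantile picks a donor uniformly at random from the top quantile. The example scores are taken from one perturbation round in the log output of the run in this section.

```python
import math
import random


def exploit_targets(scores, quantile_fraction=0.5, rng=None):
    """Map each bottom-quantile trial to a randomly chosen top-quantile trial."""
    if rng is None:
        rng = random.Random()
    ranked = sorted(scores, key=scores.get)  # trial ids, worst to best
    # Never let the two quantiles overlap.
    n = min(len(ranked) // 2, max(1, math.ceil(len(ranked) * quantile_fraction)))
    if n == 0:
        return {}
    bottom, top = ranked[:n], ranked[-n:]
    return {loser: rng.choice(top) for loser in bottom}


# Scores reported for the four trials at one perturbation step.
scores = {
    "942f2_00000": 0.399434,
    "942f2_00001": 0.506889,
    "942f2_00002": 0.406418,
    "942f2_00003": 0.505573,
}
pairs = exploit_targets(scores, quantile_fraction=0.5, rng=random.Random(0))
for loser, donor in pairs.items():
    print(f"clone {donor} into {loser}")
```

With only two trials there is a single possible donor, so every exploit copies the same trial. With four trials and `quantile_fraction=0.5` there are two possible donors, which is where the additional diversity comes from.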

if ray.is_initialized():

    ray.shutdown()

ray.init()

perturbation_interval = 4

pbt_scheduler = PopulationBasedTraining(

    time_attr="training_iteration",

    perturbation_interval=perturbation_interval,

    quantile_fraction=0.5,

    resample_probability=0.5,

    hyperparam_mutations={

        "lr": tune.qloguniform(5e-3, 1e-1, 5e-4),

        "h0": tune.uniform(0.0, 1.0),

        "h1": tune.uniform(0.0, 1.0),

    },

    synch=True,

)

tuner = Tuner(

    train_func,

    param_space={

        "lr": tune.qloguniform(5e-3, 1e-1, 5e-4),

        "h0": tune.grid_search([0.0, 1.0, 0.01, 0.99]),  # 4 trials

        "h1": tune.sample_from(lambda spec: 1.0 - spec.config["h0"]),

        "num_training_iterations": 100,

        "checkpoint_interval": perturbation_interval,

    },

    tune_config=TuneConfig(

        num_samples=1,

        metric="Q",

        mode="max",

        # Set the PBT scheduler in this config

        scheduler=pbt_scheduler,

    ),

    run_config=tune.RunConfig(

        stop={"training_iteration": 100},

        failure_config=tune.FailureConfig(max_failures=3),

    ),

)

pbt_4_results = tuner.fit()
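The scheduler configuration above sets `resample_probability=0.5`, and the run's log output labels every hyperparameter change as `(resample)`, `(* 0.8)`, or `(* 1.2)`. The sketch below shows one way such an explore step could work. It is an illustration under stated assumptions, not Ray's code: the names `explore`, `sample_lr`, `sample_unit`, and `RESAMPLERS` are hypothetical, `sample_lr` is only a rough stand-in for `tune.qloguniform(5e-3, 1e-1, 5e-4)`, and the two perturbation factors are assumed to be equally likely.

```python
import math
import random

PERTURBATION_FACTORS = (0.8, 1.2)  # the factors that appear in the PBT log lines


def sample_lr(rng):
    # Rough stand-in for tune.qloguniform(5e-3, 1e-1, 5e-4): log-uniform, then quantized.
    raw = math.exp(rng.uniform(math.log(5e-3), math.log(1e-1)))
    return round(raw / 5e-4) * 5e-4


def sample_unit(rng):
    # Stand-in for tune.uniform(0.0, 1.0).
    return rng.uniform(0.0, 1.0)


RESAMPLERS = {"lr": sample_lr, "h0": sample_unit, "h1": sample_unit}


def explore(config, resample_probability=0.5, rng=None):
    """Return a perturbed copy of config; each key is resampled or scaled."""
    if rng is None:
        rng = random.Random()
    new_config = dict(config)
    for key, resample in RESAMPLERS.items():
        if rng.random() < resample_probability:
            new_config[key] = resample(rng)  # draw a fresh value from the distribution
        else:
            new_config[key] = config[key] * rng.choice(PERTURBATION_FACTORS)
    return new_config


# Parent config copied from one of the cloned trials in the log output.
parent = {"lr": 0.094, "h0": 0.9900164097635725, "h1": 0.916496614878753}
children = [explore(parent, rng=random.Random(seed)) for seed in range(200)]
overshoot = [c["h0"] for c in children if c["h0"] > 1.0]
print(len(overshoot), "of", len(children), "children have h0 > 1.0")
```

Because the multiplicative branch is not clipped to the sampling range in this sketch, `h0` can leave the `[0, 1]` interval declared in `hyperparam_mutations`. The same effect is visible in the run's output, where values such as `h0 = 1.18802` appear.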


Tune Status

| Current time | 2025-02-24 16:23:40 |
| Running for | 00:01:18.96 |
| Memory | 21.3/36.0 GiB |

System Info

PopulationBasedTraining: 48 checkpoints, 48 perturbs

Logical resource usage: 1.0/12 CPUs, 0/0 GPUs

Trial Status

| Trial name | status | loc | h0 | lr | iter | total time (s) | Q | theta0 | theta1 |
|---|---|---|---|---|---|---|---|---|---|
| train_func_942f2_00000 | TERMINATED | 127.0.0.1:23974 | 0.937925 | 0.1008 | 100 | 0.0464976 | 1.2 | 2.01666e-06 | 3.7014e-06 |
| train_func_942f2_00001 | TERMINATED | 127.0.0.1:23979 | 1.18802 | 0.0995 | 100 | 0.0468764 | 1.2 | 1.74199e-06 | 2.48858e-06 |
| train_func_942f2_00002 | TERMINATED | 127.0.0.1:23981 | 1.71075 | 0.0395 | 100 | 0.0464926 | 1.2 | 2.42464e-06 | 4.55143e-06 |
| train_func_942f2_00003 | TERMINATED | 127.0.0.1:23982 | 1.42562 | 0.084 | 100 | 0.0461869 | 1.2 | 1.68403e-06 | 3.62265e-06 |

2025-02-24 16:22:21,301 WARNING sample.py:469 -- sample_from functions that take a spec dict are deprecated. Please update your function to work with the config dict directly.

2025-02-24 16:22:21,302 WARNING sample.py:469 -- sample_from functions that take a spec dict are deprecated. Please update your function to work with the config dict directly.

2025-02-24 16:22:21,303 WARNING sample.py:469 -- sample_from functions that take a spec dict are deprecated. Please update your function to work with the config dict directly.

2025-02-24 16:22:21,304 WARNING sample.py:469 -- sample_from functions that take a spec dict are deprecated. Please update your function to work with the config dict directly.

(train_func pid=23644) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/checkpoint_000000)

2025-02-24 16:22:22,342 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 0.090282) into trial 942f2_00001 (score = -0.168306)

2025-02-24 16:22:22,343 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00001:

lr : 0.029 --- (resample) --> 0.092

h0 : 0.0 --- (resample) --> 0.21859874791501244

h1 : 1.0 --- (resample) --> 0.14995290392498006

2025-02-24 16:22:22,343 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 0.090282) into trial 942f2_00002 (score = -0.022182)

2025-02-24 16:22:22,344 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.029 --- (* 0.8) --> 0.023200000000000002

h0 : 0.0 --- (* 0.8) --> 0.0

h1 : 1.0 --- (* 0.8) --> 0.8

2025-02-24 16:22:23,155 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/result.json

(train_func pid=23649) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/checkpoint_000000)

2025-02-24 16:22:23,942 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/result.json

2025-02-24 16:22:24,739 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00002_2_h0=0.0100,lr=0.0170_2025-02-24_16-22-21/result.json

2025-02-24 16:22:25,531 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00003_3_h0=0.9900,lr=0.0530_2025-02-24_16-22-21/result.json

2025-02-24 16:22:25,539 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 0.323032) into trial 942f2_00002 (score = 0.221418)

2025-02-24 16:22:25,540 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.092 --- (resample) --> 0.0385

h0 : 0.21859874791501244 --- (* 1.2) --> 0.2623184974980149

h1 : 0.14995290392498006 --- (* 0.8) --> 0.11996232313998406

2025-02-24 16:22:25,540 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 0.323032) into trial 942f2_00003 (score = 0.239975)

2025-02-24 16:22:25,541 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.092 --- (* 1.2) --> 0.1104

h0 : 0.21859874791501244 --- (resample) --> 0.12144956368659676

h1 : 0.14995290392498006 --- (* 1.2) --> 0.17994348470997606

2025-02-24 16:22:26,332 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/result.json

2025-02-24 16:22:27,106 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/result.json

2025-02-24 16:22:27,882 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00002_2_h0=0.0100,lr=0.0170_2025-02-24_16-22-21/result.json

(train_func pid=23670) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00002_2_h0=0.0100,lr=0.0170_2025-02-24_16-22-21/checkpoint_000001) [repeated 10x across cluster]

2025-02-24 16:22:28,670 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00003_3_h0=0.9900,lr=0.0530_2025-02-24_16-22-21/result.json

2025-02-24 16:22:28,678 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 0.506889) into trial 942f2_00000 (score = 0.399434)

2025-02-24 16:22:28,678 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00000:

lr : 0.092 --- (* 0.8) --> 0.0736

h0 : 0.21859874791501244 --- (resample) --> 0.8250136748029772

h1 : 0.14995290392498006 --- (resample) --> 0.5594708426615145

2025-02-24 16:22:28,679 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00003 (score = 0.505573) into trial 942f2_00002 (score = 0.406418)

2025-02-24 16:22:28,679 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.1104 --- (resample) --> 0.025500000000000002

h0 : 0.12144956368659676 --- (* 1.2) --> 0.1457394764239161

h1 : 0.17994348470997606 --- (resample) --> 0.8083066244826129

(train_func pid=23671) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/checkpoint_000001) [repeated 7x across cluster]

2025-02-24 16:22:29,460 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/result.json

2025-02-24 16:22:30,255 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/result.json

2025-02-24 16:22:31,035 WARNING logger.py:186 -- Remote file not found: /Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00002_2_h0=0.0100,lr=0.0170_2025-02-24_16-22-21/result.json

2025-02-24 16:22:31,847 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 0.652138) into trial 942f2_00002 (score = 0.606250)

2025-02-24 16:22:31,848 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.092 --- (resample) --> 0.007

h0 : 0.21859874791501244 --- (* 0.8) --> 0.17487899833200996

h1 : 0.14995290392498006 --- (resample) --> 0.5452206891524898

2025-02-24 16:22:31,848 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 0.652138) into trial 942f2_00003 (score = 0.646607)

2025-02-24 16:22:31,849 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.092 --- (* 0.8) --> 0.0736

h0 : 0.21859874791501244 --- (resample) --> 0.007051230918609708

h1 : 0.14995290392498006 --- (* 0.8) --> 0.11996232313998406

(train_func pid=23690) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/checkpoint_000004) [repeated 7x across cluster]

(train_func pid=23696) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/checkpoint_000003) [repeated 7x across cluster]

2025-02-24 16:22:35,034 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 1.038110) into trial 942f2_00002 (score = 0.671646)

2025-02-24 16:22:35,034 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.0736 --- (resample) --> 0.018000000000000002

h0 : 0.8250136748029772 --- (resample) --> 0.002064710166551409

h1 : 0.5594708426615145 --- (resample) --> 0.5725196002079377

2025-02-24 16:22:35,035 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 0.766900) into trial 942f2_00003 (score = 0.688034)

2025-02-24 16:22:35,035 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.092 --- (* 1.2) --> 0.1104

h0 : 0.21859874791501244 --- (resample) --> 0.6821981346240038

h1 : 0.14995290392498006 --- (* 0.8) --> 0.11996232313998406

2025-02-24 16:22:38,261 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 1.121589) into trial 942f2_00001 (score = 0.857585)

2025-02-24 16:22:38,262 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00001:

lr : 0.0736 --- (* 0.8) --> 0.05888

h0 : 0.8250136748029772 --- (resample) --> 0.4514076493559237

h1 : 0.5594708426615145 --- (* 0.8) --> 0.4475766741292116

2025-02-24 16:22:38,262 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.050600) into trial 942f2_00003 (score = 0.947136)

2025-02-24 16:22:38,263 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.018000000000000002 --- (resample) --> 0.039

h0 : 0.002064710166551409 --- (* 0.8) --> 0.0016517681332411272

h1 : 0.5725196002079377 --- (* 1.2) --> 0.6870235202495252

(train_func pid=23715) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/checkpoint_000006) [repeated 7x across cluster]

(train_func pid=23719) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/checkpoint_000005) [repeated 7x across cluster]

2025-02-24 16:22:41,544 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 1.161966) into trial 942f2_00002 (score = 1.061179)

2025-02-24 16:22:41,544 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.0736 --- (* 0.8) --> 0.05888

h0 : 0.8250136748029772 --- (* 0.8) --> 0.6600109398423818

h1 : 0.5594708426615145 --- (resample) --> 0.7597397486004039

2025-02-24 16:22:41,545 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 1.146381) into trial 942f2_00003 (score = 1.075142)

2025-02-24 16:22:41,545 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.05888 --- (resample) --> 0.022

h0 : 0.4514076493559237 --- (* 1.2) --> 0.5416891792271085

h1 : 0.4475766741292116 --- (* 0.8) --> 0.3580613393033693

2025-02-24 16:22:44,761 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.179472) into trial 942f2_00003 (score = 1.153187)

2025-02-24 16:22:44,762 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.05888 --- (resample) --> 0.077

h0 : 0.6600109398423818 --- (* 1.2) --> 0.7920131278108581

h1 : 0.7597397486004039 --- (* 0.8) --> 0.6077917988803232

2025-02-24 16:22:44,762 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.179472) into trial 942f2_00001 (score = 1.163228)

2025-02-24 16:22:44,763 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00001:

lr : 0.05888 --- (* 0.8) --> 0.04710400000000001

h0 : 0.6600109398423818 --- (resample) --> 0.9912816837768351

h1 : 0.7597397486004039 --- (resample) --> 0.14906117271353014

(train_func pid=23743) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00003_3_h0=0.9900,lr=0.0530_2025-02-24_16-22-21/checkpoint_000002) [repeated 7x across cluster]

(train_func pid=23748) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/checkpoint_000007) [repeated 7x across cluster]

2025-02-24 16:22:47,992 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 1.191012) into trial 942f2_00001 (score = 1.185283)

2025-02-24 16:22:47,993 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00001:

lr : 0.0736 --- (resample) --> 0.017

h0 : 0.8250136748029772 --- (* 1.2) --> 0.9900164097635725

h1 : 0.5594708426615145 --- (resample) --> 0.8982838603244675

2025-02-24 16:22:47,994 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00003 (score = 1.190555) into trial 942f2_00002 (score = 1.188719)

2025-02-24 16:22:47,994 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.077 --- (resample) --> 0.008

h0 : 0.7920131278108581 --- (resample) --> 0.6807322169820972

h1 : 0.6077917988803232 --- (* 0.8) --> 0.4862334391042586

(train_func pid=23768) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00002_2_h0=0.0100,lr=0.0170_2025-02-24_16-22-21/checkpoint_000008) [repeated 7x across cluster]

2025-02-24 16:22:51,175 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 1.195622) into trial 942f2_00002 (score = 1.191142)

2025-02-24 16:22:51,175 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.0736 --- (resample) --> 0.0205

h0 : 0.8250136748029772 --- (* 1.2) --> 0.9900164097635725

h1 : 0.5594708426615145 --- (resample) --> 0.6233012271154452

2025-02-24 16:22:51,176 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 1.195622) into trial 942f2_00001 (score = 1.192855)

2025-02-24 16:22:51,177 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00001:

lr : 0.0736 --- (* 0.8) --> 0.05888

h0 : 0.8250136748029772 --- (resample) --> 0.6776393680340219

h1 : 0.5594708426615145 --- (resample) --> 0.5972686909595455

(train_func pid=23773) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00003_3_h0=0.9900,lr=0.0530_2025-02-24_16-22-21/checkpoint_000002) [repeated 7x across cluster]

2025-02-24 16:22:54,409 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 1.197864) into trial 942f2_00002 (score = 1.196497)

2025-02-24 16:22:54,410 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.0736 --- (resample) --> 0.094

h0 : 0.8250136748029772 --- (* 1.2) --> 0.9900164097635725

h1 : 0.5594708426615145 --- (resample) --> 0.916496614878753

2025-02-24 16:22:54,411 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00003 (score = 1.198000) into trial 942f2_00001 (score = 1.197464)

2025-02-24 16:22:54,411 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00001:

lr : 0.077 --- (resample) --> 0.009000000000000001

h0 : 0.7920131278108581 --- (resample) --> 0.09724457530695019

h1 : 0.6077917988803232 --- (* 0.8) --> 0.4862334391042586

(train_func pid=23796) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/checkpoint_000011) [repeated 7x across cluster]

(train_func pid=23801) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/checkpoint_000010) [repeated 7x across cluster]

2025-02-24 16:22:57,678 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.199463) into trial 942f2_00001 (score = 1.198073)

2025-02-24 16:22:57,678 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00001:

lr : 0.094 --- (resample) --> 0.011

h0 : 0.9900164097635725 --- (* 1.2) --> 1.188019691716287

h1 : 0.916496614878753 --- (resample) --> 0.854735155913485

2025-02-24 16:22:57,679 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00003 (score = 1.199079) into trial 942f2_00000 (score = 1.198957)

2025-02-24 16:22:57,679 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00000:

lr : 0.077 --- (* 1.2) --> 0.0924

h0 : 0.7920131278108581 --- (resample) --> 0.8783500284482123

h1 : 0.6077917988803232 --- (* 1.2) --> 0.7293501586563879

2025-02-24 16:23:00,836 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.199862) into trial 942f2_00001 (score = 1.199540)

2025-02-24 16:23:00,836 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00001:

lr : 0.094 --- (* 0.8) --> 0.0752

h0 : 0.9900164097635725 --- (resample) --> 0.06185563216172696

h1 : 0.916496614878753 --- (resample) --> 0.06868522206070948

2025-02-24 16:23:00,837 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.199862) into trial 942f2_00003 (score = 1.199576)

2025-02-24 16:23:00,837 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.094 --- (* 1.2) --> 0.1128

h0 : 0.9900164097635725 --- (resample) --> 0.3672068732350573

h1 : 0.916496614878753 --- (resample) --> 0.3263725487154706

(train_func pid=23821) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/checkpoint_000013) [repeated 7x across cluster]

(train_func pid=23822) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00002_2_h0=0.0100,lr=0.0170_2025-02-24_16-22-21/checkpoint_000011) [repeated 7x across cluster]

2025-02-24 16:23:04,072 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.199964) into trial 942f2_00001 (score = 1.199871)

2025-02-24 16:23:04,073 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00001:

lr : 0.094 --- (* 0.8) --> 0.0752

h0 : 0.9900164097635725 --- (resample) --> 0.8143417145384867

h1 : 0.916496614878753 --- (* 1.2) --> 1.0997959378545035

2025-02-24 16:23:04,073 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.199964) into trial 942f2_00000 (score = 1.199896)

2025-02-24 16:23:04,074 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00000:

lr : 0.094 --- (* 0.8) --> 0.0752

h0 : 0.9900164097635725 --- (resample) --> 0.28845453300169044

h1 : 0.916496614878753 --- (resample) --> 0.02235127072371279

2025-02-24 16:23:07,516 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 1.199986) into trial 942f2_00003 (score = 1.199955)

2025-02-24 16:23:07,516 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.0752 --- (* 0.8) --> 0.060160000000000005

h0 : 0.8143417145384867 --- (* 1.2) --> 0.9772100574461839

h1 : 1.0997959378545035 --- (* 0.8) --> 0.8798367502836029

2025-02-24 16:23:07,517 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 1.199986) into trial 942f2_00000 (score = 1.199969)

2025-02-24 16:23:07,517 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00000:

lr : 0.0752 --- (resample) --> 0.0155

h0 : 0.8143417145384867 --- (* 1.2) --> 0.9772100574461839

h1 : 1.0997959378545035 --- (* 0.8) --> 0.8798367502836029

(train_func pid=23846) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00003_3_h0=0.9900,lr=0.0530_2025-02-24_16-22-21/checkpoint_000007) [repeated 7x across cluster]

(train_func pid=23846) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00003_3_h0=0.9900,lr=0.0530_2025-02-24_16-22-21/checkpoint_000006) [repeated 6x across cluster]

2025-02-24 16:23:10,721 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 1.199994) into trial 942f2_00000 (score = 1.199989)

2025-02-24 16:23:10,722 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00000:

lr : 0.0752 --- (resample) --> 0.005

h0 : 0.8143417145384867 --- (resample) --> 0.14093804696635504

h1 : 1.0997959378545035 --- (resample) --> 0.04714342092680601

2025-02-24 16:23:10,723 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.199997) into trial 942f2_00003 (score = 1.199994)

2025-02-24 16:23:10,723 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.094 --- (* 0.8) --> 0.0752

h0 : 0.9900164097635725 --- (resample) --> 0.4368194817950344

h1 : 0.916496614878753 --- (resample) --> 0.7095403843032826

(train_func pid=23867) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00002_2_h0=0.0100,lr=0.0170_2025-02-24_16-22-21/checkpoint_000015) [repeated 7x across cluster]

(train_func pid=23867) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00002_2_h0=0.0100,lr=0.0170_2025-02-24_16-22-21/checkpoint_000014) [repeated 7x across cluster]

2025-02-24 16:23:13,989 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.199999) into trial 942f2_00000 (score = 1.199994)

2025-02-24 16:23:13,989 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00000:

lr : 0.094 --- (resample) --> 0.0925

h0 : 0.9900164097635725 --- (resample) --> 0.998683166515384

h1 : 0.916496614878753 --- (* 1.2) --> 1.0997959378545035

2025-02-24 16:23:13,990 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.199999) into trial 942f2_00001 (score = 1.199998)

2025-02-24 16:23:13,990 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00001:

lr : 0.094 --- (resample) --> 0.0995

h0 : 0.9900164097635725 --- (* 1.2) --> 1.188019691716287

h1 : 0.916496614878753 --- (* 0.8) --> 0.7331972919030024

2025-02-24 16:23:17,224 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 1.200000) into trial 942f2_00003 (score = 1.199999)

2025-02-24 16:23:17,224 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.0925 --- (resample) --> 0.006500000000000001

h0 : 0.998683166515384 --- (* 0.8) --> 0.7989465332123072

h1 : 1.0997959378545035 --- (* 0.8) --> 0.8798367502836029

2025-02-24 16:23:17,225 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 1.200000) into trial 942f2_00002 (score = 1.200000)

2025-02-24 16:23:17,225 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.0995 --- (* 0.8) --> 0.0796

h0 : 1.188019691716287 --- (* 0.8) --> 0.9504157533730297

h1 : 0.7331972919030024 --- (* 0.8) --> 0.586557833522402

(train_func pid=23892) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/checkpoint_000018) [repeated 7x across cluster]

(train_func pid=23892) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/checkpoint_000017) [repeated 7x across cluster]

2025-02-24 16:23:20,513 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 1.200000) into trial 942f2_00003 (score = 1.200000)

2025-02-24 16:23:20,514 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.0995 --- (resample) --> 0.0325

h0 : 1.188019691716287 --- (* 0.8) --> 0.9504157533730297

h1 : 0.7331972919030024 --- (resample) --> 0.19444236619090172

2025-02-24 16:23:20,515 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 1.200000) into trial 942f2_00002 (score = 1.200000)

2025-02-24 16:23:20,515 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.0925 --- (* 0.8) --> 0.074

h0 : 0.998683166515384 --- (* 1.2) --> 1.1984197998184607

h1 : 1.0997959378545035 --- (resample) --> 0.6632564869583678

2025-02-24 16:23:23,779 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00000 (score = 1.200000) into trial 942f2_00003 (score = 1.200000)

2025-02-24 16:23:23,779 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.0925 --- (resample) --> 0.0205

h0 : 0.998683166515384 --- (* 0.8) --> 0.7989465332123072

h1 : 1.0997959378545035 --- (* 1.2) --> 1.319755125425404

2025-02-24 16:23:23,780 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 1.200000) into trial 942f2_00002 (score = 1.200000)

2025-02-24 16:23:23,780 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.0995 --- (resample) --> 0.059500000000000004

h0 : 1.188019691716287 --- (* 1.2) --> 1.4256236300595444

h1 : 0.7331972919030024 --- (resample) --> 0.19309431415014977

(train_func pid=23917) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/checkpoint_000020) [repeated 7x across cluster]

(train_func pid=23917) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00000_0_h0=0.0000,lr=0.0290_2025-02-24_16-22-21/checkpoint_000019) [repeated 7x across cluster]

2025-02-24 16:23:27,089 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.200000) into trial 942f2_00003 (score = 1.200000)

2025-02-24 16:23:27,090 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.059500000000000004 --- (* 0.8) --> 0.0476

h0 : 1.4256236300595444 --- (* 0.8) --> 1.1404989040476357

h1 : 0.19309431415014977 --- (* 0.8) --> 0.15447545132011983

2025-02-24 16:23:27,090 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.200000) into trial 942f2_00000 (score = 1.200000)

2025-02-24 16:23:27,091 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00000:

lr : 0.059500000000000004 --- (resample) --> 0.051000000000000004

h0 : 1.4256236300595444 --- (resample) --> 0.5322491694545954

h1 : 0.19309431415014977 --- (resample) --> 0.4907896898235511

2025-02-24 16:23:30,403 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 1.200000) into trial 942f2_00003 (score = 1.200000)

2025-02-24 16:23:30,403 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00003:

lr : 0.0995 --- (resample) --> 0.084

h0 : 1.188019691716287 --- (* 1.2) --> 1.4256236300595444

h1 : 0.7331972919030024 --- (resample) --> 0.7068936194953941

2025-02-24 16:23:30,404 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00002 (score = 1.200000) into trial 942f2_00000 (score = 1.200000)

2025-02-24 16:23:30,404 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00000:

lr : 0.059500000000000004 --- (resample) --> 0.041

h0 : 1.4256236300595444 --- (* 1.2) --> 1.7107483560714531

h1 : 0.19309431415014977 --- (resample) --> 0.6301738678453057

(train_func pid=23942) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00003_3_h0=0.9900,lr=0.0530_2025-02-24_16-22-21/checkpoint_000008) [repeated 7x across cluster]

(train_func pid=23942) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00002_2_h0=0.0100,lr=0.0170_2025-02-24_16-22-21/checkpoint_000019) [repeated 7x across cluster]

2025-02-24 16:23:33,643 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 1.200000) into trial 942f2_00002 (score = 1.200000)

2025-02-24 16:23:33,643 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.0995 --- (resample) --> 0.08

h0 : 1.188019691716287 --- (* 1.2) --> 1.4256236300595444

h1 : 0.7331972919030024 --- (resample) --> 0.12615387675586676

2025-02-24 16:23:33,644 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00001 (score = 1.200000) into trial 942f2_00000 (score = 1.200000)

2025-02-24 16:23:33,644 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00000:

lr : 0.0995 --- (resample) --> 0.0185

h0 : 1.188019691716287 --- (* 1.2) --> 1.4256236300595444

h1 : 0.7331972919030024 --- (* 0.8) --> 0.586557833522402

(train_func pid=23962) Checkpoint successfully created at: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/checkpoint_000023) [repeated 6x across cluster]

(train_func pid=23967) Restored on 127.0.0.1 from checkpoint: Checkpoint(filesystem=local, path=/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21/train_func_942f2_00001_1_h0=1.0000,lr=0.0070_2025-02-24_16-22-21/checkpoint_000022) [repeated 7x across cluster]

2025-02-24 16:23:36,961 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00003 (score = 1.200000) into trial 942f2_00000 (score = 1.200000)

2025-02-24 16:23:36,961 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00000:

lr : 0.084 --- (* 1.2) --> 0.1008

h0 : 1.4256236300595444 --- (resample) --> 0.9379248877817841

h1 : 0.7068936194953941 --- (* 0.8) --> 0.5655148955963153

2025-02-24 16:23:36,962 INFO pbt.py:878 --

[PopulationBasedTraining] [Exploit] Cloning trial 942f2_00003 (score = 1.200000) into trial 942f2_00002 (score = 1.200000)

2025-02-24 16:23:36,962 INFO pbt.py:905 --

[PopulationBasedTraining] [Explore] Perturbed the hyperparameter config of trial942f2_00002:

lr : 0.084 --- (resample) --> 0.0395

h0 : 1.4256236300595444 --- (* 1.2) --> 1.7107483560714531

h1 : 0.7068936194953941 --- (* 1.2) --> 0.8482723433944729

2025-02-24 16:23:40,264 INFO tune.py:1009 -- Wrote the latest version of all result files and experiment state to '/Users/rdecal/ray_results/train_func_2025-02-24_16-22-21' in 0.0086s.

2025-02-24 16:23:40,265 INFO tune.py:1041 -- Total run time: 78.97 seconds (78.95 seconds for the tuning loop).
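The `[Explore]` entries in the log above show three possible outcomes for each hyperparameter after a clone: multiplication by 0.8, multiplication by 1.2, or a fresh resample. The following is a minimal sketch of that step, not Ray's actual implementation. The function name `explore`, the `resample_fns` argument, the default `resample_probability=0.25`, and the sampling ranges are assumptions made for illustration.

```python
import random


def explore(config, resample_fns, resample_probability=0.25,
            factors=(1.2, 0.8), rng=random):
    """Return a perturbed copy of `config` (illustrative sketch only).

    Each hyperparameter is either resampled from its search distribution
    or scaled by one of the two perturbation factors.
    """
    new_config = dict(config)
    for key, resample in resample_fns.items():
        if rng.random() < resample_probability:
            # "(resample)" in the log: draw a fresh value.
            new_config[key] = resample()
        elif rng.random() > 0.5:
            # "(* 1.2)" in the log: scale up.
            new_config[key] = config[key] * factors[0]
        else:
            # "(* 0.8)" in the log: scale down.
            new_config[key] = config[key] * factors[1]
    return new_config


# Hypothetical usage: the parent values are taken from the log above,
# the sampling ranges are assumed for illustration.
rng = random.Random(0)
parent = {"lr": 0.0925, "h0": 0.998683166515384, "h1": 1.0997959378545035}
resample_fns = {
    "lr": lambda: rng.uniform(0.01, 0.1),
    "h0": lambda: rng.uniform(0.0, 1.0),
    "h1": lambda: rng.uniform(0.0, 1.0),
}
child = explore(parent, resample_fns, rng=rng)
print(child)
```

Because every trial in this run reports the same score (1.200000), which trial is cloned into which no longer carries much information at this stage; the hyperparameter perturbations are what still differ between the log entries.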

fig, axs = plt.subplots(1, 2, figsize=(13, 6), gridspec_kw=dict(width_ratios=[1.5, 1]))

colors = ["red", "black", "blue", "green"]
labels = ["h = [1, 0]", "h = [0, 1]", "h = [0.01, 0.99]", "h = [0.99, 0.01]"]

plot_parameter_history(
    pbt_4_results,
    colors,
    labels,
    perturbation_interval=perturbation_interval,
    fig=fig,
    ax=axs[0],
)
plot_Q_history(pbt_4_results, colors, labels, ax=axs[1])

make_animation(
    pbt_4_results,
    colors,
    labels,
    perturbation_interval=perturbation_interval,
    filename="pbt4.gif",
)

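Summary#

Hopefully, this guide has given you a better understanding of the PBT algorithm. If you run into any problems with this notebook, please file an issue, and bring any questions you may have to the Ray Slack.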